Short history of OSI

This post is based on the article “The Internet that wasn’t” by Andrew L. Russell [Russell13], published in IEEE Spectrum in 2013. The author, Assistant Professor of History at the Stevens Institute of Technology (New Jersey, USA), had written an earlier article about OSI history in 2006 [Russell06] and felt that it needed updating in 2013. This post is just a (telegraphic) summary, so if you are really interested in the subject I recommend reading the original articles.

Prof. Russell was prompted to update the information after receiving several emails from OSI (Open Systems Interconnection) veterans complaining about the way he had portrayed OSI in [Russell06]. In 2014 he also wrote a book telling the story of OSI [Russell14].

Introduction

By the end of the 1970s, a group of computer industry representatives from France, the U.K. and the U.S.A. started developing a computer network standard called OSI: Open Systems Interconnection. Their goal was to enable the global exchange of information. In the 1980s thousands of engineers were involved in the project and OSI seemed set to become a reality. However, by the early 1990s OSI had stalled and TCP/IP had been adopted as the standard for worldwide computer communications.

This story goes back to the 1960s.

1960s

In this era computer communications was a hot research topic. Packet switching emerged, with Paul Baran (RAND Corporation, USA) and Donald Davies (National Physical Laboratory, UK) as its leading proponents. The idea is that data is broken into discrete blocks (packets) that are routed separately, and the receiver reassembles the packets to obtain the original message. This was more efficient than circuit switching, which sets up a dedicated path between transmitter and receiver before any data is sent.
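The packet switching idea described above (split, route separately, reassemble) can be illustrated with a toy Python sketch. It is not any real protocol: the `Packet` header fields (`seq`, `total`) and the helper names `packetize`, `route_independently` and `reassemble` are hypothetical, and "independent routing" is simulated simply by shuffling the delivery order.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    # Hypothetical toy header: sequence number and total packet count.
    seq: int
    total: int
    payload: bytes


def packetize(message: bytes, size: int) -> list[Packet]:
    """Split a message into fixed-size packets (the last one may be shorter)."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]


def route_independently(packets: list[Packet], seed: int = 0) -> list[Packet]:
    """Simulate packets taking different paths by delivering them in random order."""
    delivered = list(packets)
    random.Random(seed).shuffle(delivered)
    return delivered


def reassemble(packets: list[Packet]) -> bytes:
    """Restore the original message by sorting on sequence number."""
    if not packets or len(packets) != packets[0].total:
        raise ValueError("missing packets")
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


if __name__ == "__main__":
    msg = b"Packets are routed separately and reassembled at the receiver."
    arrived = route_independently(packetize(msg, size=8), seed=42)
    print([p.seq for p in arrived])  # arrival order may differ from send order
    assert reassemble(arrived) == msg
```

Because each packet carries its own sequence number, the receiver does not care which path a packet took or when it arrived; a circuit-switched design would instead guarantee ordering by fixing the path in advance.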

In 1969, researchers funded by ARPA (the US Department of Defense’s Advanced Research Projects Agency, later renamed DARPA) created the first packet-switched network: ARPANET. IBM and the European telephone monopolies started similar projects. IBM mimicked circuit switching with so-called virtual circuits, reusing established technology and thus constraining the development of packet switching.

1970s

In 1972 the International Network Working Group (INWG) was created to standardize packet switching. The main parties were the USA, the UK and France. Active members included Vint Cerf (USA, first chairman of INWG) and Louis Pouzin (France).

Pouzin, leader of Cyclades (the French packet switching project), proposed the idea of the datagram: packets are sent without first setting up a connection. INWG supported the datagram.
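The datagram vs virtual circuit debate, which resurfaces throughout this story, can be contrasted with a toy Python sketch. This is an illustration under simplifying assumptions, not a model of Cyclades, X.25 or any OSI protocol: the class and function names (`Datagram`, `VirtualCircuitNode`, `forward_datagram`) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Datagram:
    # Connectionless: every packet carries the full destination address.
    dst: str
    payload: str


def forward_datagram(d: Datagram) -> tuple[str, str]:
    # No setup and no per-connection state: route on the address in the packet.
    return d.dst, d.payload


@dataclass
class VirtualCircuitNode:
    """Toy switch: a circuit is opened first, then packets carry only a circuit ID."""
    table: dict[int, str] = field(default_factory=dict)
    next_id: int = 1

    def open_circuit(self, dst: str) -> int:
        # Connection setup: the network stores state before any data flows.
        vc_id = self.next_id
        self.table[vc_id] = dst
        self.next_id += 1
        return vc_id

    def forward(self, vc_id: int, payload: str) -> tuple[str, str]:
        # Data transfer: the destination comes from per-circuit state
        # (an unknown circuit ID raises KeyError).
        return self.table[vc_id], payload


if __name__ == "__main__":
    print(forward_datagram(Datagram("host-b", "hello")))
    node = VirtualCircuitNode()
    vc = node.open_circuit("host-b")
    print(node.forward(vc, "hello"))
```

The design difference is where the state lives: datagrams keep the network stateless and push responsibility to the end hosts, while virtual circuits keep per-connection state inside the network, which is closer to the telephone model.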

In 1974 Cerf and Robert Kahn (DARPA) published the basis of the Internet, building on Pouzin’s ideas [Cerf74].

In 1975 INWG’s network protocol was submitted to the CCITT (Consultative Committee for International Telegraphy and Telephony), but it was rejected. INWG blamed circuit switching supporters for the negative response. The following year (1976) Cerf left Stanford for DARPA, where he worked with Bob Kahn.

In 1977 the UK proposed to ISO (International Organization for Standardization) the creation of a standard for packet switching. France and the USA supported the idea. The goal was to interconnect any kind of computer, and the standard was expected to break the monopoly of big companies. That was the birth of “Open Systems Interconnection”: OSI.

Charles Bachman (database expert) chaired the committee. He proposed a model based on IBM’s Systems Network Architecture. However, OSI supported heterogeneity, which was very welcome to companies running many different computing systems, such as General Motors.

In 1978, after struggling to get funding from the French government, Pouzin abandoned Cyclades, the French packet switching network.

The OSI model was based on layers, which enabled modularity, so there were different committees and working groups for each layer. To become an international standard, each specification had to go through ISO’s four-step process:

- Working draft

- Draft proposed international standard

- Draft international standard

- International standard

The first plenary meeting was held on 28 February 1978, attended by 10 countries and observers from international organizations. IBM managed to convince OSI to include many of its business interests.

OSI forged an alliance with CCITT, so… datagram vs virtual circuits, again! Both points of view were included, adding to OSI’s complexity. Chronicle of a death foretold: lots of layers, lots of parties involved, datagrams, virtual circuits…

1980s

The OSI reference model was published as an international standard in 1984. There were individual standards for:

- transport protocols

- electronic mail

- network management

- etc.

Meanwhile, the USA developed TCP/IP, which was adopted as the Internet protocol in 1983. Oddly enough, the TCP/IP group joined OSI in 1985 and wanted to apply the OSI layers to the TCP/IP model. They also wanted to have all US computers complying with OSI by 1990.

In 1989 OSI was still under development, and OSI people had many concerns:

- Lots of money had been invested by companies, the USA and the European Community.

- The Internet looked very attractive and was already working (so what was the point of OSI?).

1990s

By the mid-1990s it was clear that OSI was not going to happen. The main problems were:

- Too many interested parties

- Too much bureaucracy

- Too complex

The Internet was adopted because:

- Internet standards were free, while OSI standards were not

- It was up and running (in the USA)

- It also promoted openness

OSI was seen as an incomprehensible standard, but it did have some good points:

- It had a better architecture

- It was more complete

Proof of this is that in 1992 the routing layer of TCP/IP was modified following OSI ideas. Nowadays OSI is still taught at universities alongside TCP/IP.

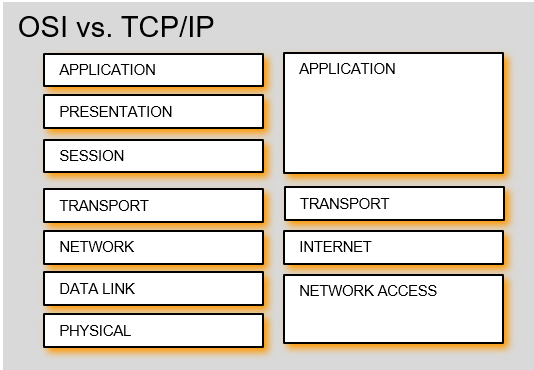

The complexity difference between the OSI and TCP/IP can be clearly seen in the following figure:

Final remarks

OSI was an interesting project, but it became a monster difficult to tame. In the end, the practical approach of TCP/IP made the Internet a reality. In summary: “OSI is a beautiful dream, TCP/IP is living it” [Russell13].

I would like to finish this post with a thought for Pouzin’s French packet switching project, Cyclades, from the early 70s. In a parallel universe the Internet is French 😉.

Bibliography

[Russell13] “The Internet that wasn’t”, Andrew L. Russell, IEEE Spectrum, 2013

[Russell06] “‘Rough Consensus and Running Code’ and the Internet-OSI Standards War”, Andrew L. Russell, IEEE Annals of the History of Computing, 2006

[Russell14] “Open Standards and the Digital Age: History, Ideology, and Networks”, Andrew L. Russell, Cambridge University Press, 2014

[Cerf74] “A Protocol for Packet Network Intercommunication”, V. Cerf, R. Kahn, IEEE Transactions on Communications, 1974